Accessing Historical Weather Data with Dark Sky API

Importing Past Weather Data

Photo taken by me last summer in Majuro

In my IoT class last year with Jay Ham we used a website called Dark Sky to get current weather conditions. I’ve been thinking about it recently, because I’d like to see whether I can match weather conditions to changes in the depth to water of wells at a site. I was inspired to look into this by a talk from Jonathan Kennel of the University of Guelph (Happy Canada Day!) and several conversations with my advisor.

I’ll walk through how I imported the data to R.

Dark Sky is a great resource. However, when I went to revisit the website I found that they have joined Apple, which means they are no longer creating new accounts. Luckily I already had one from my class last fall. The API is still supported through at least the end of 2021. Later I’ll mention some ways that you could get similar (maybe better) data through other channels.

The API allows up to 1000 calls per day for free. Using the Time Machine you can request data from a certain date and location. I focused on hourly data, though it’s probably finer resolution than I need.

“The hourly data block will contain data points starting at midnight (local time) of the day requested, and continuing until midnight (local time) of the following day.”

The docs include a sample API call, which includes your key, the location, and the date requested.

GET https://api.darksky.net/forecast/0123456789abcdef9876543210fedcba/42.3601,-71.0589,255657600?exclude=currently,flags

A quick visit to my best friend Stack Overflow provided a little more clarity about how to use the API in R. The date is in UNIX time (seconds since January 1, 1970, UTC). I wanted to start at January 1, 2000, so I used a handy UNIX converter to find my desired date number, 946684800. I swapped my key, location, and date into the URL and now I’m ready to call the API.
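If you would rather skip the online converter, the same number can be worked out in base R. This is a minimal sketch that assumes you want midnight UTC on the start date; the variable names are just for illustration, and local midnight at the site would give a different number.

```r
# Convert a calendar date to UNIX time (seconds since 1970-01-01 00:00:00 UTC).
start_date <- as.POSIXct("2000-01-01 00:00:00", tz = "UTC")
start_unix <- as.numeric(start_date)
start_unix

# Convert back again to double-check the number.
as.POSIXct(start_unix, origin = "1970-01-01", tz = "UTC")
```

For 2000-01-01 at midnight UTC this gives 946684800, the same value the converter returned.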

# GET the URL (replace {key} with your own API key)

req <- httr::GET("https://api.darksky.net/forecast/{key}/30.012188,-94.024525,946684800?exclude=currently,flags")

req$status_code

# should be 200 if it worked. If you get 400, something has gone wrong.

# extract the raw response body

cont <- req$content

# convert the raw bytes to a character string (JSON)

char <- rawToChar(cont)

# parse the JSON; the hourly data frame ends up in df$hourly$data

df <- jsonlite::fromJSON(char)

It worked! I removed my private API key, so you’ll have to take my word for it. But now I have a new problem: the call only works for one day at a time. I want a lot of days, so I decided to write a loop.

One thing I couldn’t get to work was changing the date inside the string for the API url. I posted in the R for Data Science Slack, and a few minutes later I learned a handy new trick: with paste0() you can drop a variable into a string by closing the quotes, adding the variable between commas, and opening the quotes again. Something like this:

paste0("https://api.darksky.net/forecast/{key}/30.012188,-94.024525,", day, "?exclude=currently,flags")

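Here is a small, self-contained sketch of building the request URL from pieces. The key is a placeholder rather than a real one, and wrapping the timestamp in format() is an added precaution, not something from the Slack thread: very round numbers can otherwise be pasted in scientific notation (for example "1e+09"), which would break the URL.

```r
key <- "YOUR_API_KEY"   # placeholder, not a real key
day <- 946684800        # UNIX time for the day requested

# format() keeps the timestamp as plain digits before it is pasted in.
url <- paste0(
  "https://api.darksky.net/forecast/", key,
  "/30.012188,-94.024525,",
  format(day, scientific = FALSE),
  "?exclude=currently,flags"
)
url
```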
Great! I ran the loop and it worked, kinda. It errored out after the first run because rbind could not combine two data frames with different numbers of columns. After looking at the next few days to see which columns were off I saw that it went from 15 to 16 to 17, then back down. Very annoying! They must have added some new info for some days, but this made the data inconsistent so I had to add a select function to the loop. I selected for the 15 columns that were consistent across all days and ran it again. Success!
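One way to find the columns that are consistent across days is to intersect the column names before stacking. The sketch below uses made-up data frames; the column names and values are illustrative only, not real API output.

```r
# Three made-up "days" whose columns don't line up, like the API responses.
days <- list(
  data.frame(time = 1:2, temperature = c(50, 51)),
  data.frame(time = 3:4, temperature = c(52, 53), windGust = c(10, 12)),
  data.frame(time = 5:6, temperature = c(54, 55), windGust = c(8, 9),
             ozone = c(300, 310))
)

# Columns present in every day.
shared <- Reduce(intersect, lapply(days, names))

# Keep only those columns, then stack all the days in one go.
trimmed <- lapply(days, function(d) d[, shared, drop = FALSE])
all_days <- do.call(rbind, trimmed)
all_days
```

If you would rather keep every column, dplyr's bind_rows() is an alternative: it matches columns by name and fills the gaps with NA.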

Here’s the code I ended up with:

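library(dplyr)

# first day: initialize all_hours so there's something to rbind to

df <- jsonlite::fromJSON("https://api.darksky.net/forecast/{key}/30.012188,-94.024525,946684800?exclude=currently,flags")

all_hours <- df$hourly$data

# columns to keep; assumed here to be the first day's columns. Swap in your own list if any of them are missing on other days

cols <- names(all_hours)

# make a vector of unix dates I want (minus the first one, which I already put in all_hours)

unix_day <- seq(946771200, 1033084800, by = 86400)

for (day in unix_day) {

  # format() keeps the timestamp in plain digits when it is pasted into the URL

  df <- jsonlite::fromJSON(paste0("https://api.darksky.net/forecast/{key}/30.012188,-94.024525,",

    format(day, scientific = FALSE),

    "?exclude=currently,flags"))

  # keep only the consistent columns so rbind doesn't fail

  hourly <- select(df$hourly$data, all_of(cols))

  # append the new day after the existing rows to keep them in date order

  all_hours <- rbind(all_hours, hourly)

}

# convert unix time to date

all_hours$time <- as.POSIXct(all_hours$time, origin = "1970-01-01")

I selected the columns I want and saved them as weather.csv. Let’s zoom in to two rainy days in May 2000.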

library(ggplot2)

weather <- read.csv("weather.csv")

#weather <- weather[2810:2845,]

#convert unix time to date

weather$time <- as.POSIXct(weather$time, origin="1970-01-01")

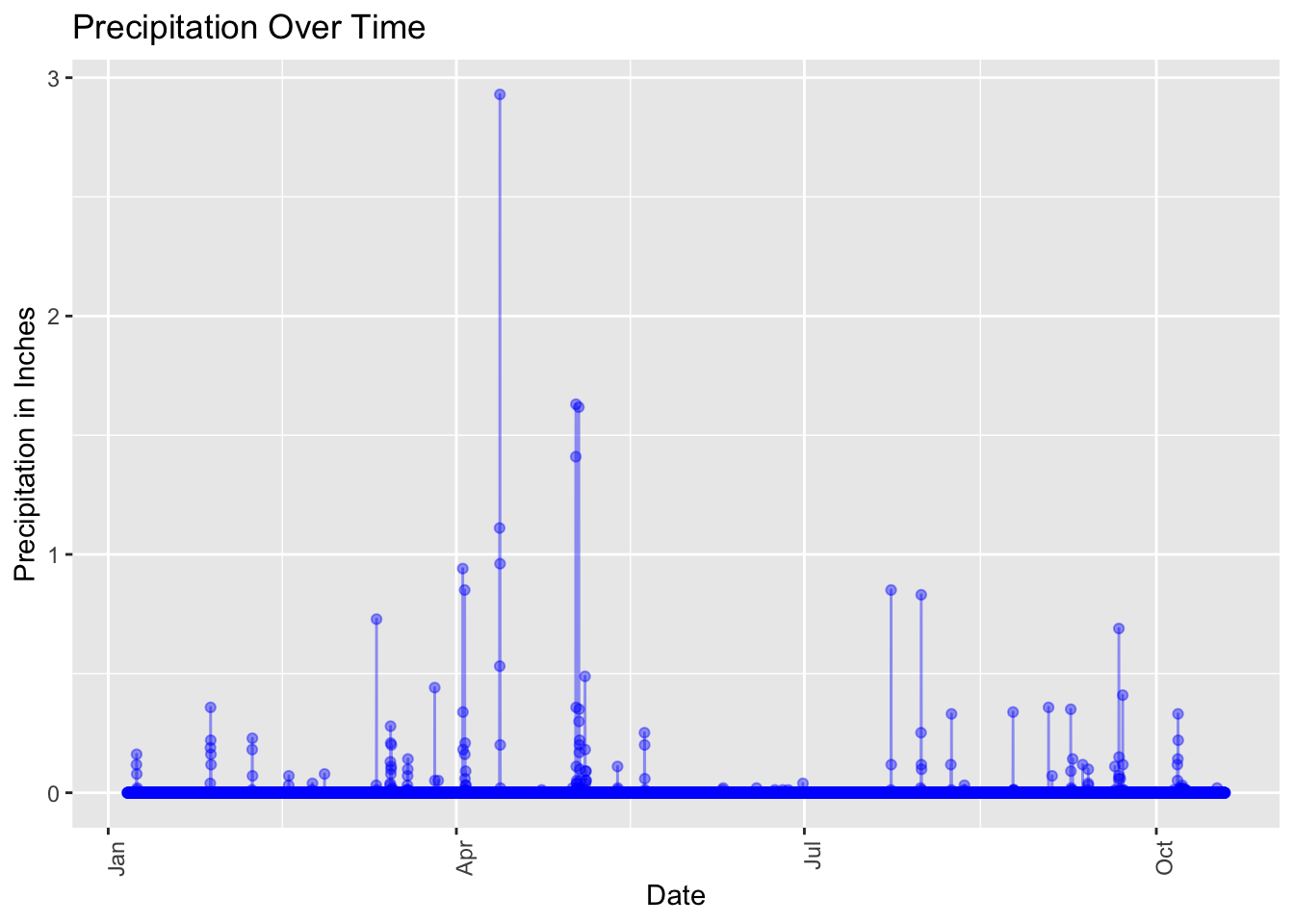

ggplot(weather, aes(x = time, y = precipIntensity, group =1)) +

geom_point(alpha = 0.4, color = "blue") +

geom_line(alpha = 0.4, color = "blue", size = 0.5) +

theme_gray() +

theme(axis.text.x=element_text(angle=90, hjust=1)) +

labs(title ="Precipitation Over Time", x = "Date", y = "Precipitation in Inches")

You can clearly see the precipitation-generating storm events over a few months.

It will take me a few days of using my 1000 free API calls to cover the period I’m interested in, but overall it was really easy.
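To plan around the daily limit, the timestamps can be split into batches of at most 1000 and one batch downloaded per day. This is a rough sketch; calls_per_day, batch_id, and batches are names chosen for illustration, and the date range simply reuses the one from the loop (including the first day).

```r
unix_day <- seq(946684800, 1033084800, by = 86400)  # one timestamp per day
calls_per_day <- 1000                               # free limit mentioned above

# Label each timestamp with a batch number, then split on that label.
batch_id <- ceiling(seq_along(unix_day) / calls_per_day)
batches <- split(unix_day, batch_id)

length(batches)   # how many days of downloading this range needs
lengths(batches)  # how many calls each batch makes
```

Each element of batches can then be fed to the download loop on a different day.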

Other Weather Options

Clearly getting historical weather data for a certain location can be really useful, but since Dark Sky is no longer creating accounts it’s not a very practical resource.

The helpful commenters on the R for Data Science Slack also suggested using NOAA to get the data. This is probably a more robust dataset anyway, but the downside is that they don’t have the option to use lat/long as a location: you have to pick from one of their existing stations. In my case there wasn’t one near the field site I was interested in, but they’re spread out across the country so I bet it will be a great source for a lot of people. There’s a good tutorial on accessing this data at rOpenSci.

I’ve also heard that Weather Underground has a good API, but it looks like you need to contribute weather data with an IoT device to access it. Cool! But it may not be useful to everyone.

Are there any other sources of weather data that you know of? Do you have suggestions to improve the approach I used for the Dark Sky data?